Red Pajama 2: The Public Dataset With a Whopping 30 Trillion Tokens

4.7 (307) In stock

4.7 (307) In stock

Together, the developer, claims it is the largest public dataset specifically for language model pre-training

Product & Engineering Archives - Pear VC

RedPajama-Data-v2: an Open Dataset with 30 Trillion Tokens for Training Large Language Models : r/LocalLLaMA

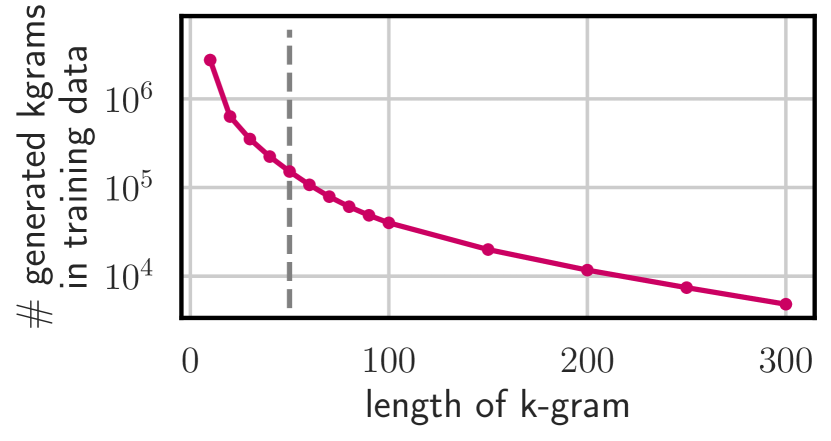

2311.17035] Scalable Extraction of Training Data from (Production) Language Models

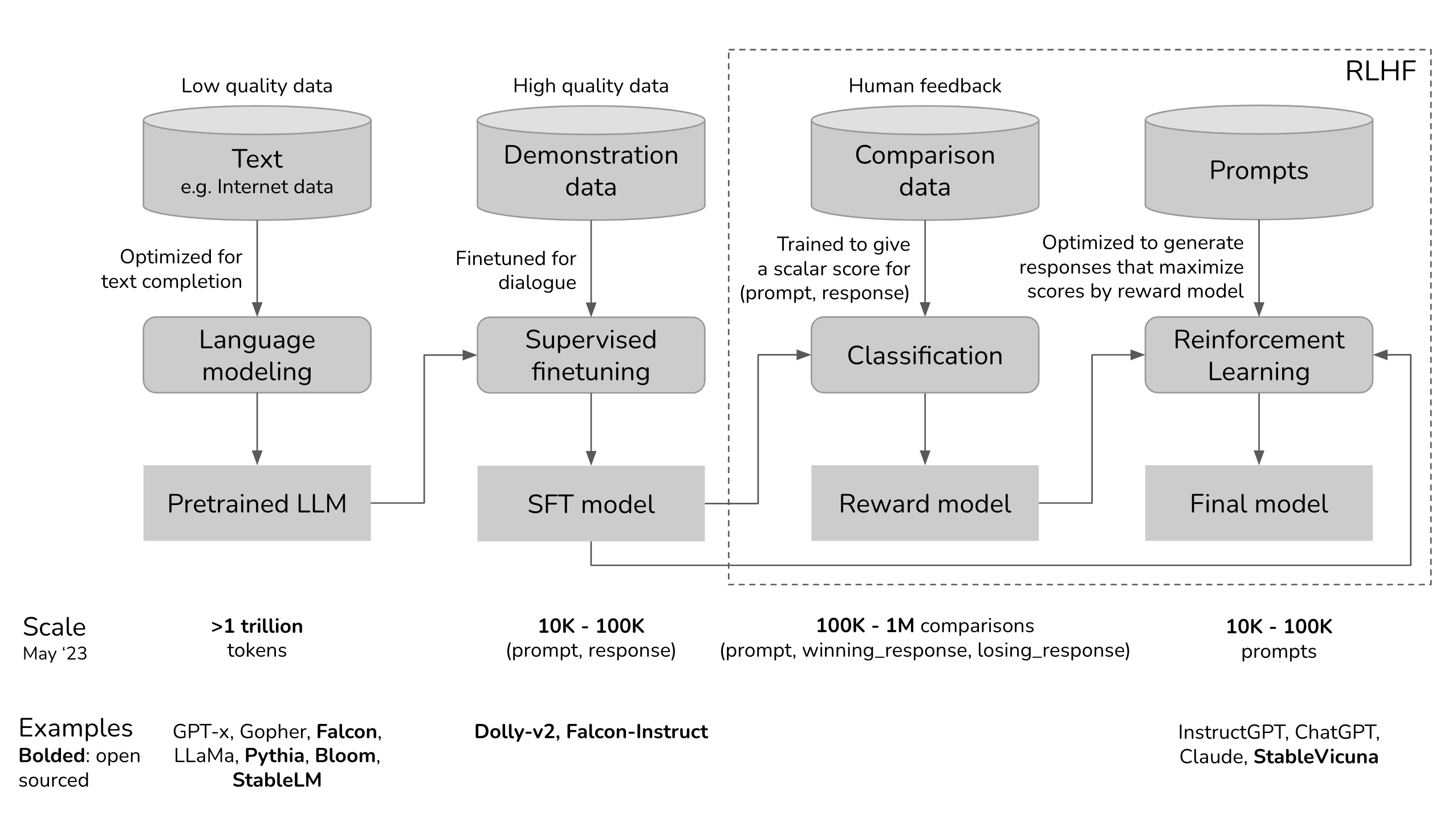

RLHF: Reinforcement Learning from Human Feedback

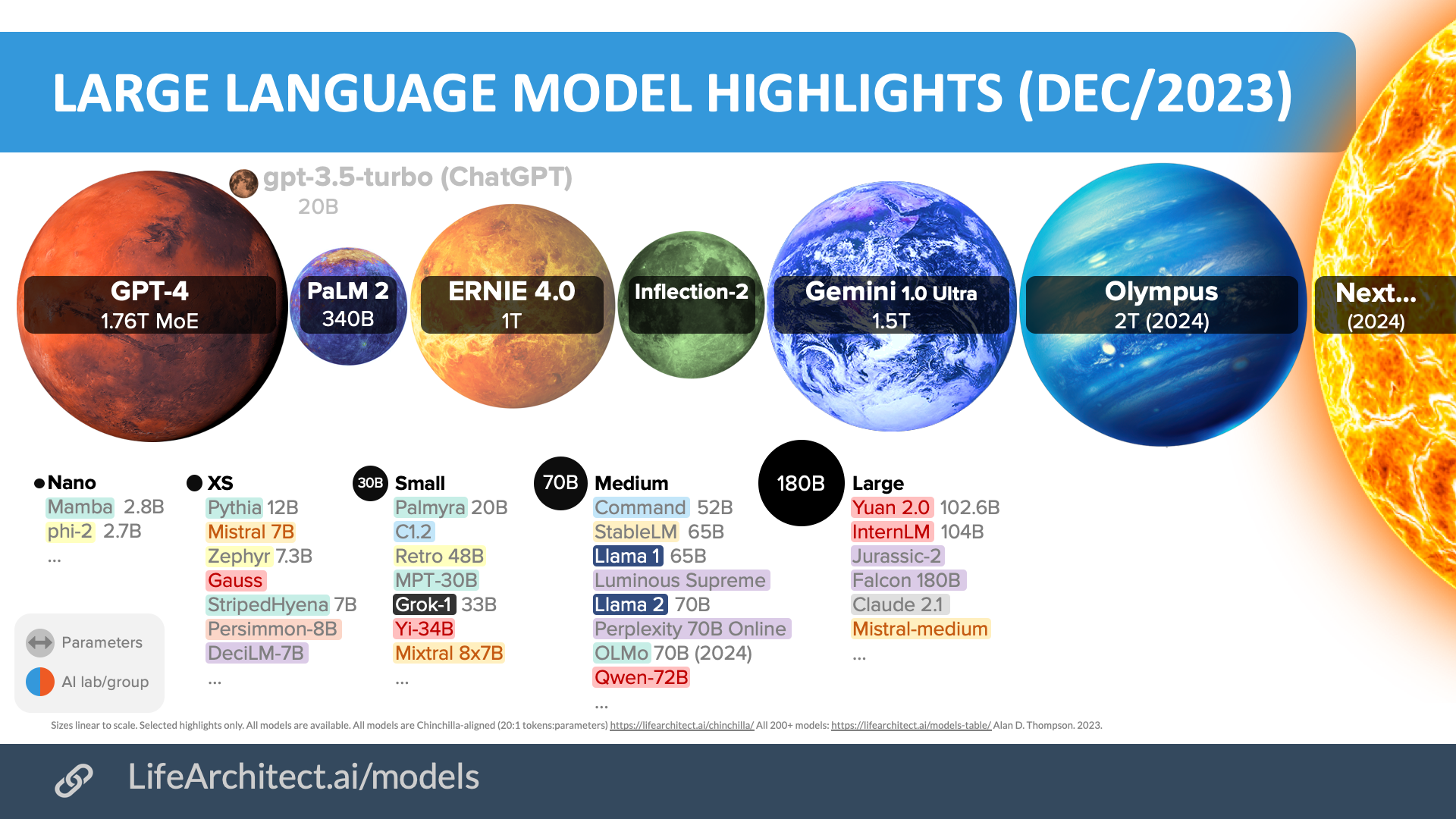

Integrated AI: The sky is comforting (2023 AI retrospective) – Dr Alan D. Thompson – Life Architect

RedPajama training progress at 440 billion tokens

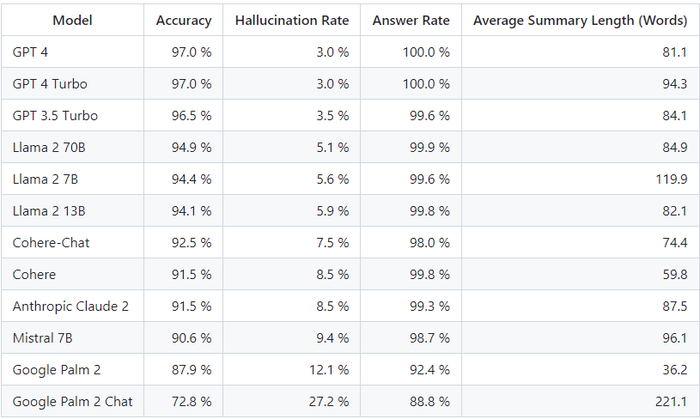

Leaderboard: OpenAI's GPT-4 Has Lowest Hallucination Rate

.png?width=700&auto=webp&quality=80&disable=upscale)

NLP recent news, page 7 of 30

togethercomputer/RedPajama-Data-1T · Datasets at Hugging Face

Leaderboard: OpenAI's GPT-4 Has Lowest Hallucination Rate