How to Measure FLOP/s for Neural Networks Empirically? – Epoch

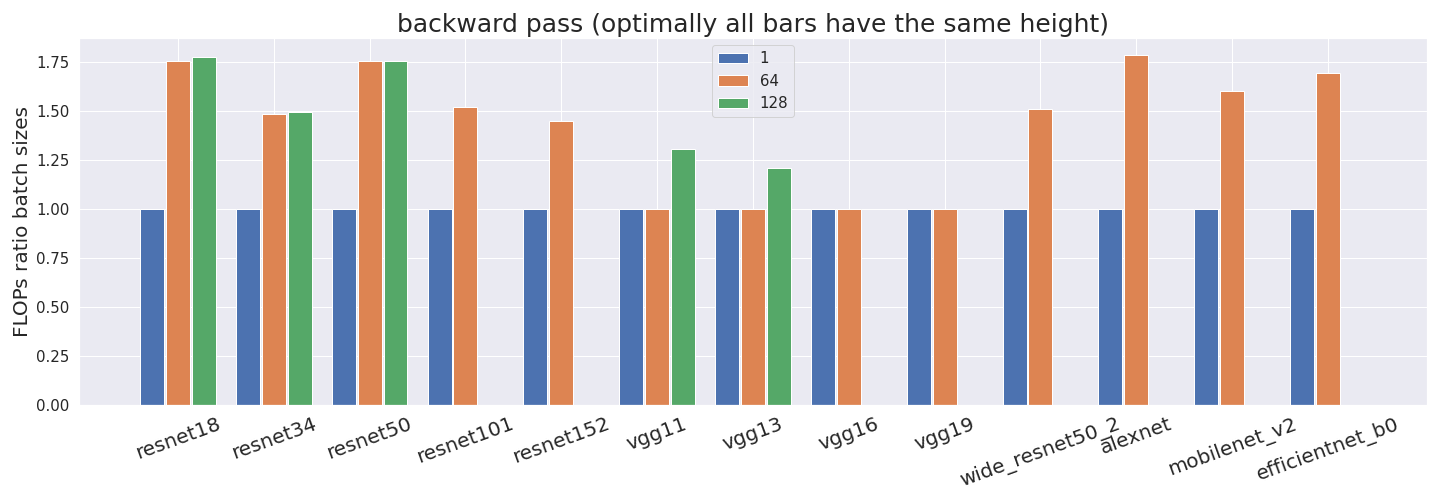

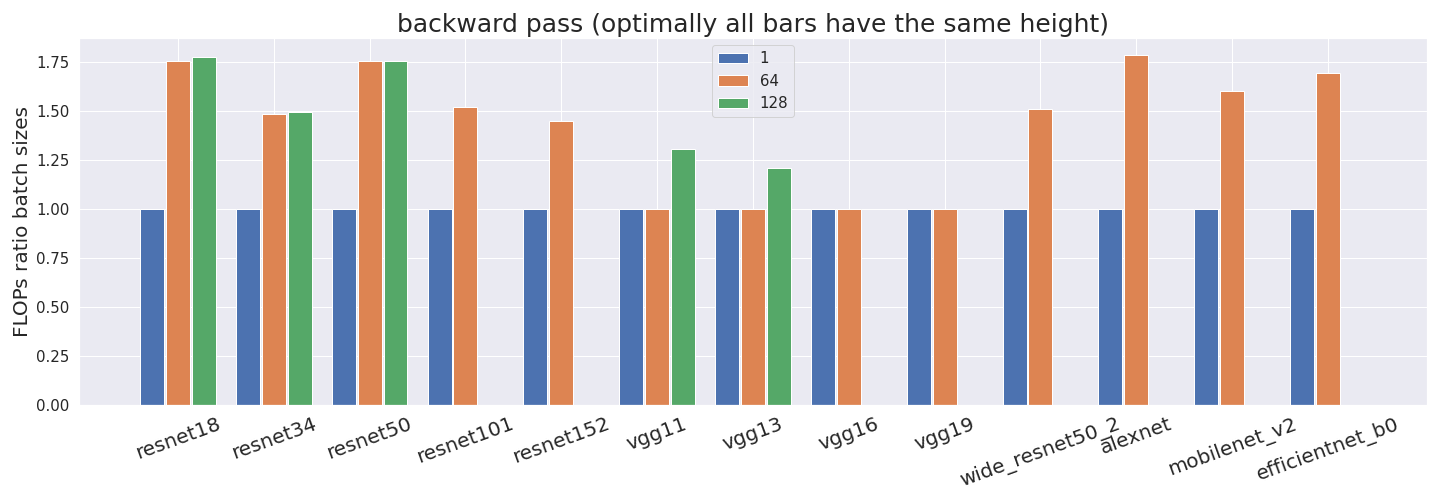

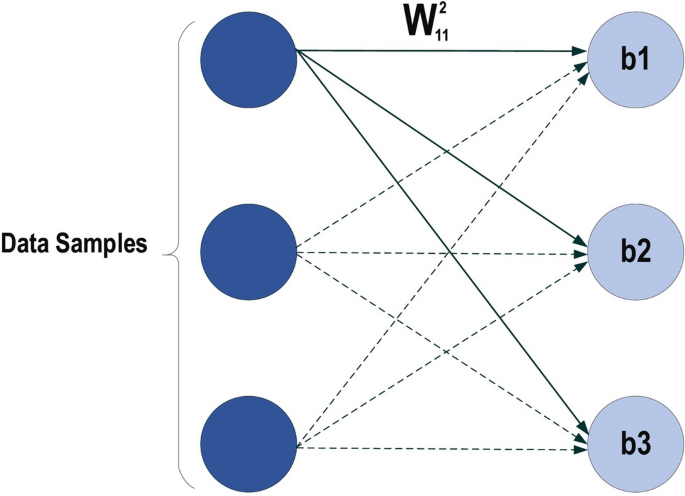

Computing the utilization rate for multiple Neural Network architectures.
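As a rough illustration of what a utilization rate is, the sketch below divides the achieved FLOP/s (floating-point operations performed, over measured wall-clock time) by the hardware's advertised peak FLOP/s. The function name, its parameters, and the example numbers are hypothetical and are not taken from the source; how the FLOP count and the timing are obtained is left open here.

```python
def utilization_rate(model_flop: float, seconds: float, peak_flops: float) -> float:
    """Return achieved FLOP/s as a fraction of the hardware's peak FLOP/s.

    model_flop : total floating-point operations performed (assumed known,
                 e.g. from a profiler or an analytic count)
    seconds    : measured wall-clock time for that work
    peak_flops : peak FLOP/s from the hardware spec sheet (an assumption
                 supplied by the caller)
    """
    if seconds <= 0 or peak_flops <= 0:
        raise ValueError("seconds and peak_flops must be positive")
    achieved_flops = model_flop / seconds  # empirical FLOP/s
    return achieved_flops / peak_flops


# Hypothetical numbers: 6e12 FLOP in 2 s on a device with a 1e13 FLOP/s peak.
example = utilization_rate(model_flop=6e12, seconds=2.0, peak_flops=1e13)
print(f"utilization: {example:.0%}")
```

A value well below 1.0 is normal in practice, since memory transfers and other overheads keep real workloads from reaching the advertised peak.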

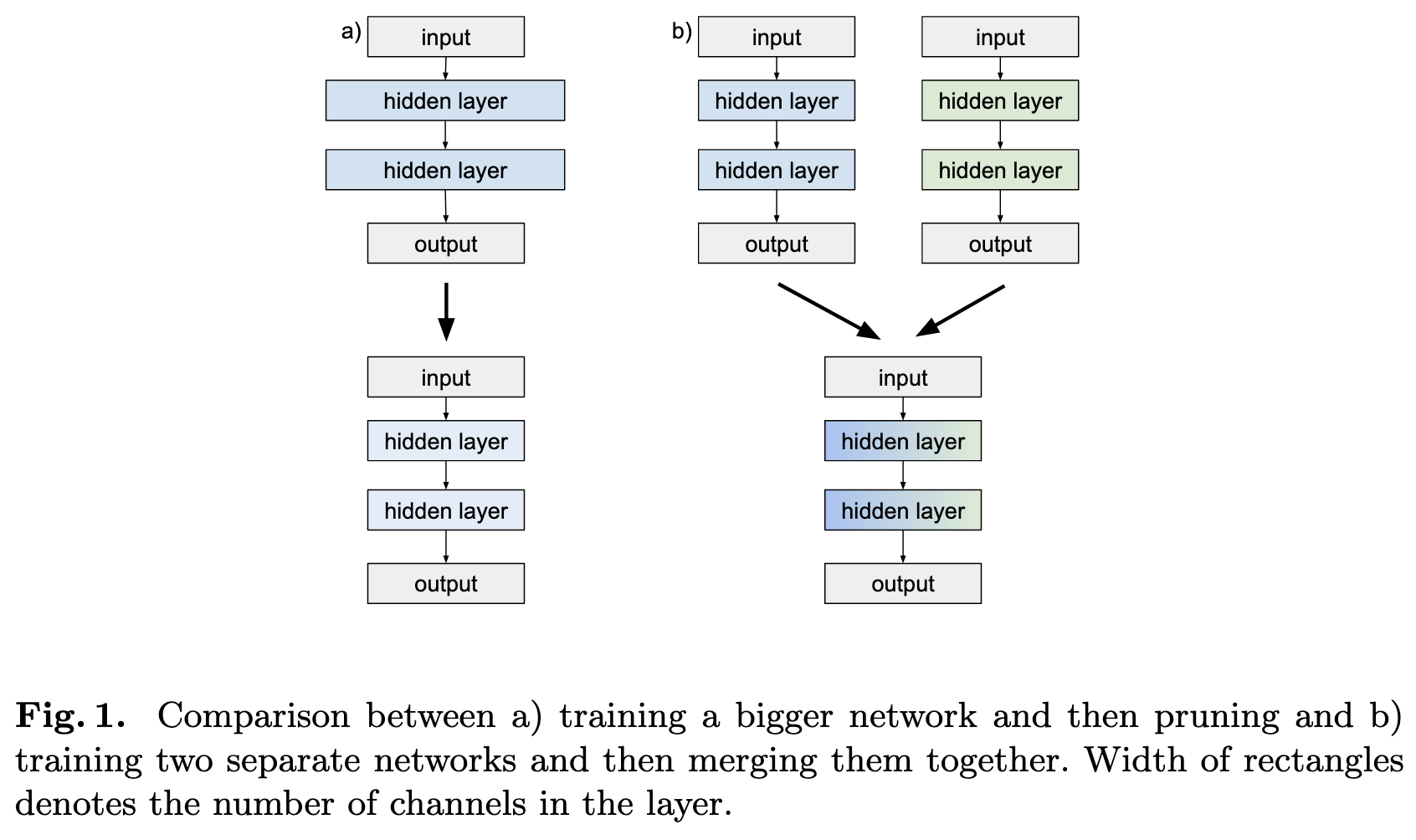

2022-4-24: Merging networks, Wall of MoE papers, Diverse models transfer better

8.8. Designing Convolution Network Architectures — Dive into Deep Learning 1.0.3 documentation

Epoch in Neural Networks, Baeldung on Computer Science

Review of deep learning: concepts, CNN architectures, challenges, applications, future directions, Journal of Big Data

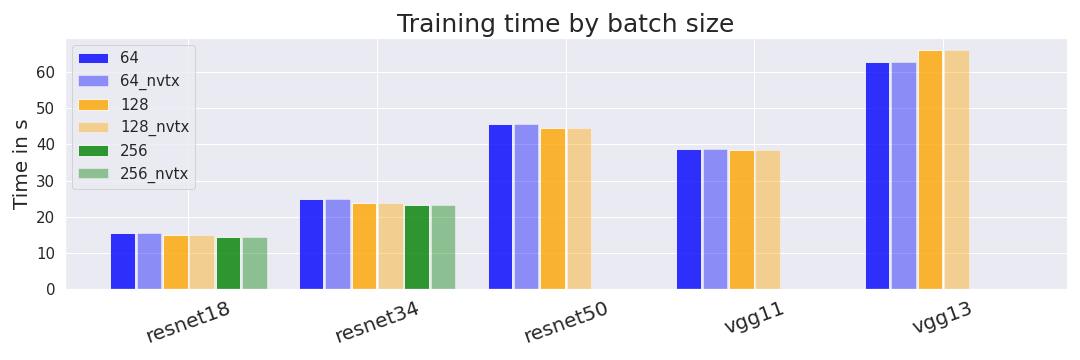

The base learning rate of Batch 256 is 0.2 with poly policy (power=2).
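The "poly" policy mentioned in this caption is commonly defined as `lr = base_lr * (1 - iter / max_iter) ** power`. The sketch below assumes that definition; `max_iter` is a hypothetical value, since the caption does not give the training length.

```python
def poly_lr(base_lr: float, iteration: int, max_iter: int, power: float = 2.0) -> float:
    """Polynomial ("poly") learning-rate decay.

    Assumes the common definition base_lr * (1 - iteration / max_iter) ** power.
    """
    if max_iter <= 0 or not 0 <= iteration <= max_iter:
        raise ValueError("require max_iter > 0 and 0 <= iteration <= max_iter")
    return base_lr * (1.0 - iteration / max_iter) ** power


# Base rate 0.2 and power 2 come from the caption; max_iter=100 is illustrative.
for it in (0, 50, 100):
    print(it, poly_lr(0.2, it, max_iter=100, power=2.0))
```

With power 2 the rate falls to a quarter of its base value at the halfway point and reaches zero at the final iteration.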

How to measure FLOP/s for Neural Networks empirically? — LessWrong

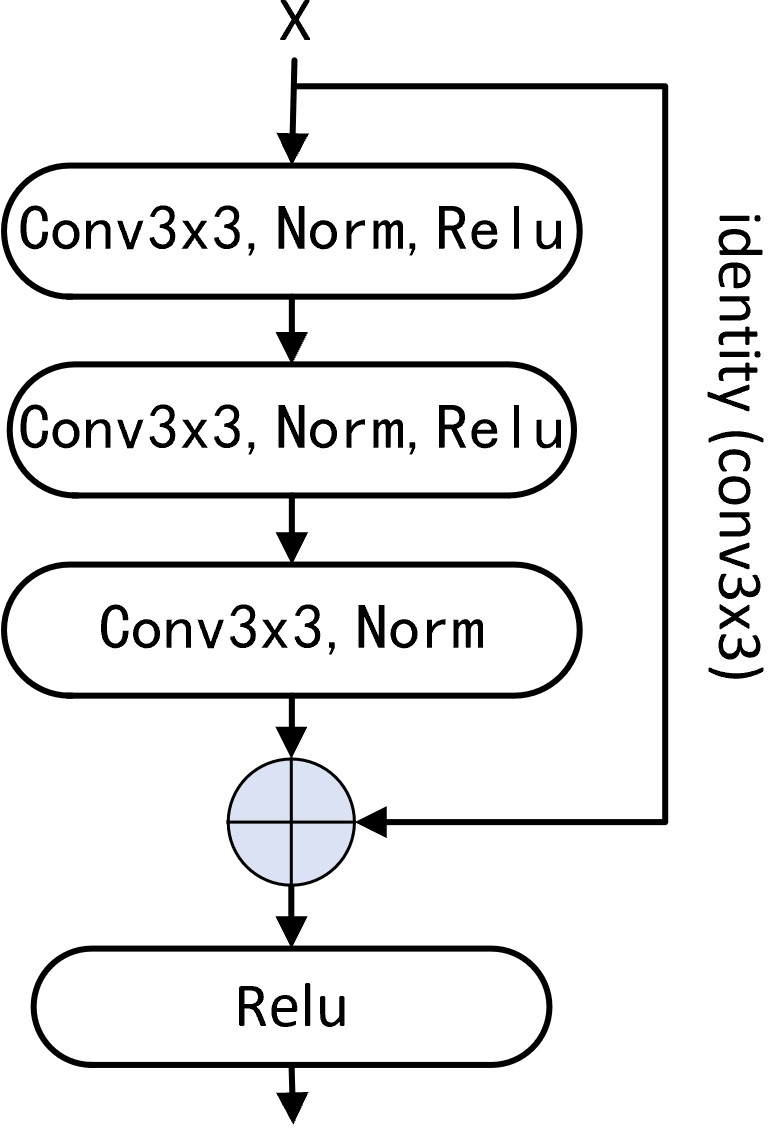

A novel residual block: replace Conv1×1 with Conv3×3 and stack more convolutions [PeerJ]

Epoch Impact Report - 2022, PDF, Machine Learning

Deep Learning, PDF, Machine Learning

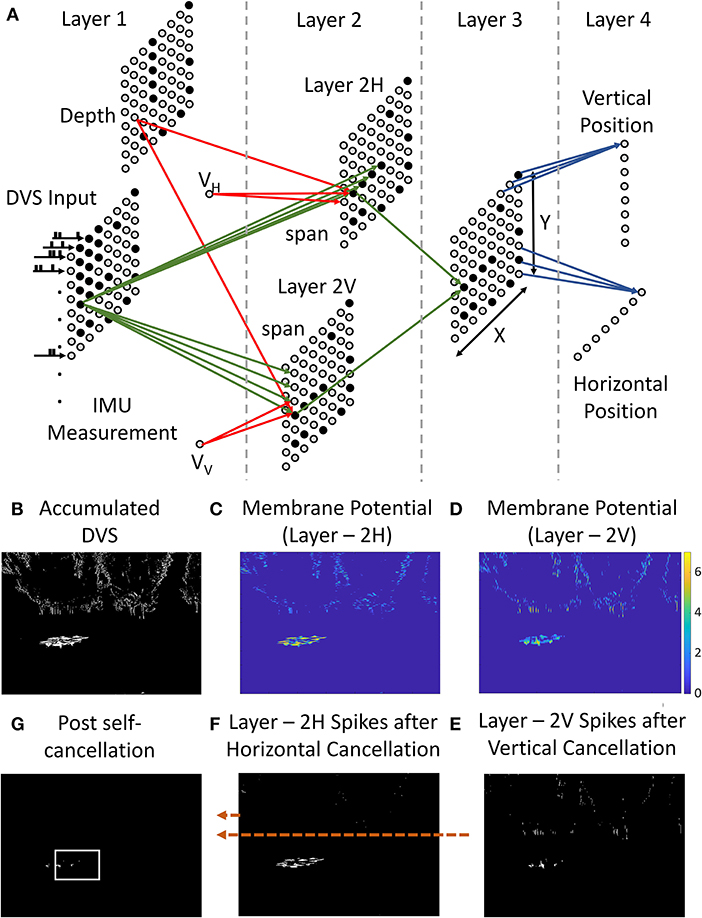

Frontiers: Bio-mimetic high-speed target localization with fused frame and event vision for edge application